On the 21st I published an article, "What is Technical SEO". Staying with that theme, this post covers how to actually do technical optimization of a website.

Readers without a technical background may be meeting some of these concepts for the first time, so I'll try to write in plain language and avoid the "curse of knowledge". If anything is unclear, that's my failing as a writer; leave a comment at the bottom of the article and I'll reply to each one.

Doing technical SEO well generally involves four steps: checking pages, submitting them for indexing, making the site easy to crawl, and monitoring the data.

Without further ado, let's get started.

1. Checking the Page

Before submitting pages to Google for indexing, it's best to check whether they meet the search engine's indexing criteria. The two core factors are page status and page elements.

Page status is relatively simple: technically, it is the server response code (HTTP status code), such as 200, 301, 404, or 500. You don't need to understand the technical details; knowing what each code means is enough.

Here is a list of server response codes and their meanings (source: the Rookie Tutorial site).

[Figure: Server Response Code]

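Apart from 200 (the request succeeded), every response code deserves prompt attention. Common ones such as 301 and 404 should be dealt with as soon as possible: fix or redirect pages that return 404, and update links that still point to 301-redirected URLs so they go straight to the final page.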
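If you'd like to spot-check status codes yourself instead of relying on a tool, here is a minimal Python sketch using only the standard library. It sends a HEAD request and deliberately does not follow redirects, so a 301 is reported as 301 rather than as the 200 of the final page. The function names and the suggested actions are my own illustration, not taken from any SEO tool, and some servers answer HEAD differently from GET, so treat the result as a first pass.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following 3xx responses so we see the original code."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # returning None makes urllib raise HTTPError for the 3xx


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for `url` without following redirects."""
    opener = urllib.request.build_opener(_NoRedirect)
    req = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "status-check-sketch/0.1"}
    )
    try:
        with opener.open(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 3xx (redirects are disabled), 4xx and 5xx responses all land here
        return err.code


def seo_action(code: int) -> str:
    """Map a status code to a rough, hypothetical to-do item."""
    if 200 <= code < 300:
        return "ok"
    if code in (301, 308):
        return "permanent redirect: update links and the sitemap to the final URL"
    if code in (302, 303, 307):
        return "temporary redirect: confirm it is intentional"
    if code in (404, 410):
        return "not found: fix the page or 301 it to a relevant URL"
    if 500 <= code < 600:
        return "server error: check the server and hosting logs"
    return "review manually"
```

Usage: `code = fetch_status("https://example.com/")`, then `print(seo_action(code))`. A request that fails at the network level (DNS error, timeout) raises an exception, typically `urllib.error.URLError`, instead of returning a code.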

The other factor is page elements, i.e. the page's HTML tags: typically heading (H) tags, meta tags, and Schema markup.

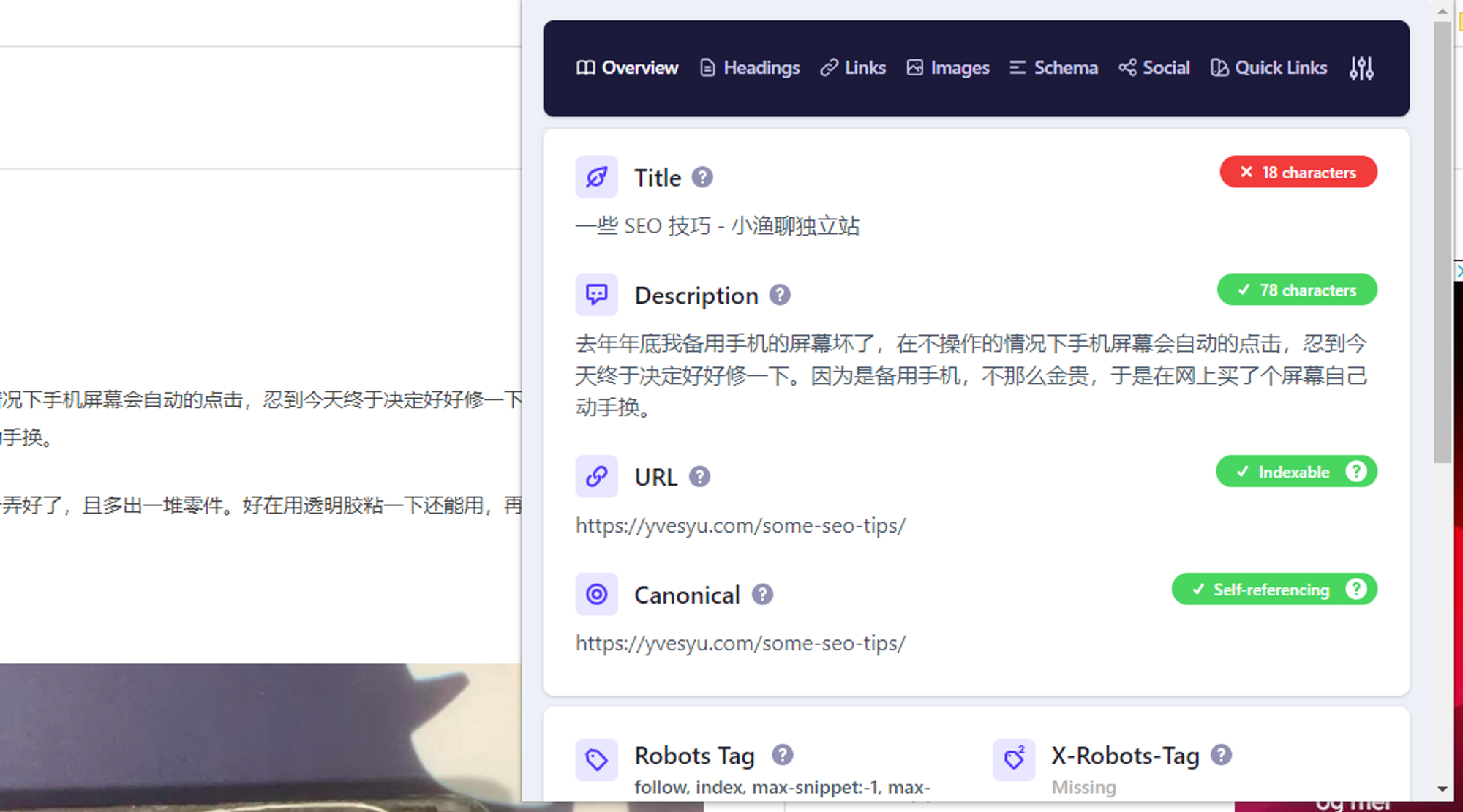
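Before reaching for an extension, it can help to see what such a check actually looks at. The sketch below uses Python's built-in `html.parser` to pull out the `<title>`, named `<meta>` tags, the number of `<h1>` tags and any JSON-LD (Schema) blocks, then flags common problems. The 60-character title limit and the "exactly one H1" rule are rules of thumb I'm assuming here, not official Google requirements, and `audit_page` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class PageElementAudit(HTMLParser):
    """Collect <title>, named <meta> tags, <h1> count and JSON-LD blocks."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta: dict[str, str] = {}
        self.h1_count = 0
        self.jsonld_blocks = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # attribute values may be None for bare attributes
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (a.get("name") or "").lower()
            if name:
                self.meta[name] = a.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "script" and (a.get("type") or "").lower() == "application/ld+json":
            self.jsonld_blocks += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html: str, max_title_len: int = 60) -> list[str]:
    """Return a list of human-readable problems found in `html`."""
    parser = PageElementAudit()
    parser.feed(html)
    parser.close()
    problems = []
    title = parser.title.strip()
    if not title:
        problems.append("missing <title>")
    elif len(title) > max_title_len:
        problems.append(f"title is {len(title)} characters")
    if not parser.meta.get("description", "").strip():
        problems.append("missing meta description")
    if parser.h1_count != 1:
        problems.append(f"expected one <h1>, found {parser.h1_count}")
    if "noindex" in parser.meta.get("robots", "").lower():
        problems.append("meta robots contains noindex")
    if parser.jsonld_blocks == 0:
        problems.append("no JSON-LD (Schema) block")
    return problems
```

A `noindex` in the robots meta tag is worth catching before submission, because it tells Google not to index the page no matter how often you submit it.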

Before submitting a page for indexing, check these tag elements for problems. I recommend Detailed SEO Extension, an extension for Google Chrome.

[Figure: Detailed SEO Extension]

This extension makes it easy to check a page for defects in many areas. Fix any problems you find, then submit the page for indexing, so you don't waste effort.

2. Submitting for Indexing

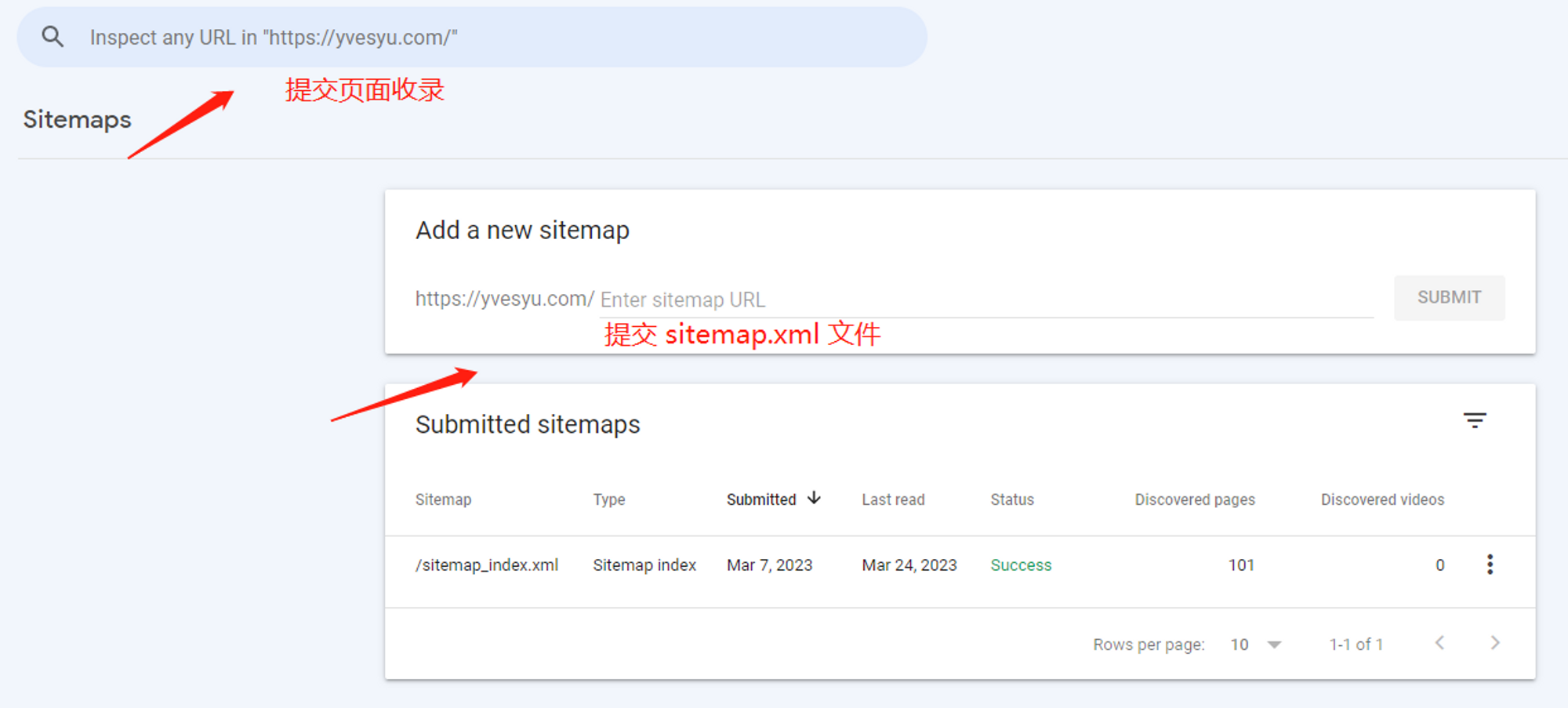

Before submitting a website or page in Google Webmaster Tools (Google Search Console), make sure the site's sitemap.xml and robots.txt files are set up properly. With these two files in place, search engine crawlers know which pages they are allowed to crawl (robots.txt) and which URLs the site wants them to crawl (sitemap.xml).
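To see how a crawler reads robots.txt, Python's standard `urllib.robotparser` can parse the file and answer "may this user agent fetch this URL?". The robots.txt content and example.com URLs below are hypothetical. One caveat: Python's parser applies the first matching rule, while Google picks the most specific one, so with mixed `Allow`/`Disallow` rules the answers can differ; treat this as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: block the WordPress admin area
# and point crawlers at the sitemap index.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = build_parser(ROBOTS_TXT)
# Admin pages are disallowed for every user agent, including Googlebot.
admin_allowed = rp.can_fetch("Googlebot", "https://example.com/wp-admin/options.php")
# A normal article URL matches no Disallow rule, so it may be crawled.
post_allowed = rp.can_fetch("Googlebot", "https://example.com/what-is-technical-seo/")
# Sitemap lines are collected separately (Python 3.8+).
sitemaps = rp.site_maps()
```

Here `admin_allowed` is `False`, `post_allowed` is `True`, and `sitemaps` lists the sitemap URL, which is how crawlers discover the sitemap even before you submit it.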

To learn more about these two files, see the articles I wrote a while ago: "Robots: What is the Robots.txt File?" and "What is a Sitemap (XML Sitemap)?"

One way to create these two files is to write them by hand following their syntax, but I don't recommend it because it's a lot of work. Instead, I prefer to install Rank Math, an SEO plugin that generates and manages both files automatically.
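If you do want to see what the hand-written version looks like, a sitemap is just XML in the sitemaps.org format. Here is a minimal generator sketch with Python's `xml.etree.ElementTree`; the page list and the `build_sitemap` name are hypothetical, and in practice a plugin like Rank Math builds the file from your posts for you. The 50,000-URL cap per file comes from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(pages):
    """pages: iterable of (loc, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    pages = list(pages)
    if len(pages) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs: split into several sitemaps plus an index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & < > for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    # Write the declaration ourselves so it always says UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

For example, `build_sitemap([("https://example.com/", "2024-01-01")])` returns a one-entry sitemap ready to save as `sitemap.xml`. The escaping matters: a raw `&` in a URL would make the file invalid XML.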

To learn more about using the Rank Math SEO plugin, see my earlier article "WordPress SEO Plugin Rank Math Guide".

Once both files are in place, submit the sitemap in Google Webmaster Tools (Search Console) and wait patiently for the search engine to crawl. Keep in mind that a brand-new domain has little authority, so crawl speed and crawl volume are usually limited at first.
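One cheap check before submitting: make sure the sitemap doesn't list URLs that robots.txt blocks, since that asks crawlers to crawl pages they are told not to fetch. A sketch assuming you have both files as text (the helper names `sitemap_urls` and `blocked_sitemap_urls` are hypothetical), with the same first-match caveat about Python's robots.txt parser as above:

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return every <loc> from a sitemaps.org <urlset> document."""
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]


def blocked_sitemap_urls(sitemap_xml: str, robots_txt: str,
                         user_agent: str = "Googlebot") -> list[str]:
    """List sitemap URLs that robots.txt disallows for `user_agent`."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return [u for u in sitemap_urls(sitemap_xml) if not rp.can_fetch(user_agent, u)]
```

An empty result means the two files agree; anything returned should either be removed from the sitemap or unblocked in robots.txt.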

[Figure: Sitemap Submit]

3. Crawling the Site

Search engines favor a well-structured site, so when you start building one, think early about the page layout, how to keep the URL structure flat, how to optimize the top navigation bar and the footer, and how to present smaller elements such as the sidebar and breadcrumbs.

Get these fundamentals right, and search engine crawlers will be able to crawl the site far more efficiently later on.
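A flat structure mostly means that important pages are only a few clicks from the home page. One way to measure that is a breadth-first search over the internal-link graph, which gives each page's minimum click depth. The link map below and the `click_depth` helper are made-up examples; in practice you would export the links from a site crawler.

```python
from collections import deque


def click_depth(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the home page over internal links.

    Returns the minimum number of clicks needed to reach each page. Pages
    that no link reaches (orphans) are simply absent from the result.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal-link map: page -> pages it links to.
SITE = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/technical-seo/", "/blog/rank-math-guide/"],
    "/blog/technical-seo/": ["/blog/what-is-technical-seo/"],
    "/about/": [],
}
depths = click_depth(SITE)
```

In this example `/blog/what-is-technical-seo/` sits three clicks deep; linking it from the home page or a category page would flatten it to depth 1 or 2. Pages you know exist but that are missing from `depths` are orphans, which crawlers will have trouble discovering.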

Here is a diagram of a good website structure.

[Figure: Site Structure]

For more on site structure, read the article "How to Beat High-Authority Sites with Fewer Links Using Proper SEO Silo Structure"; it explains the topic much better than I could.

4. Data Monitoring

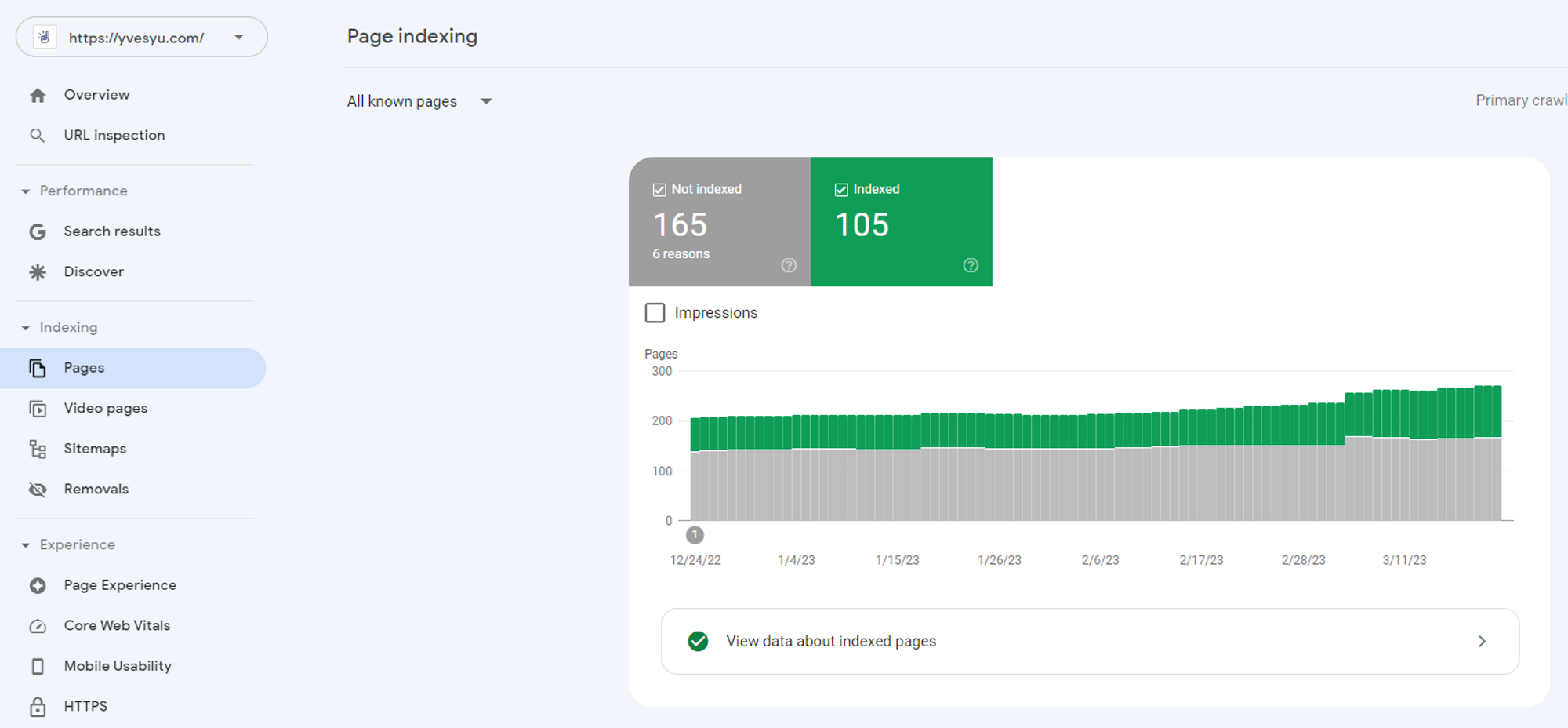

To monitor the website, the official webmaster tool is all you need: regularly check page indexing and the issues reported in the Google Search Console (GSC) dashboard, and fix problems promptly.

Click "Pages" to check the indexing status of your pages.

[Figure: GSC Page]

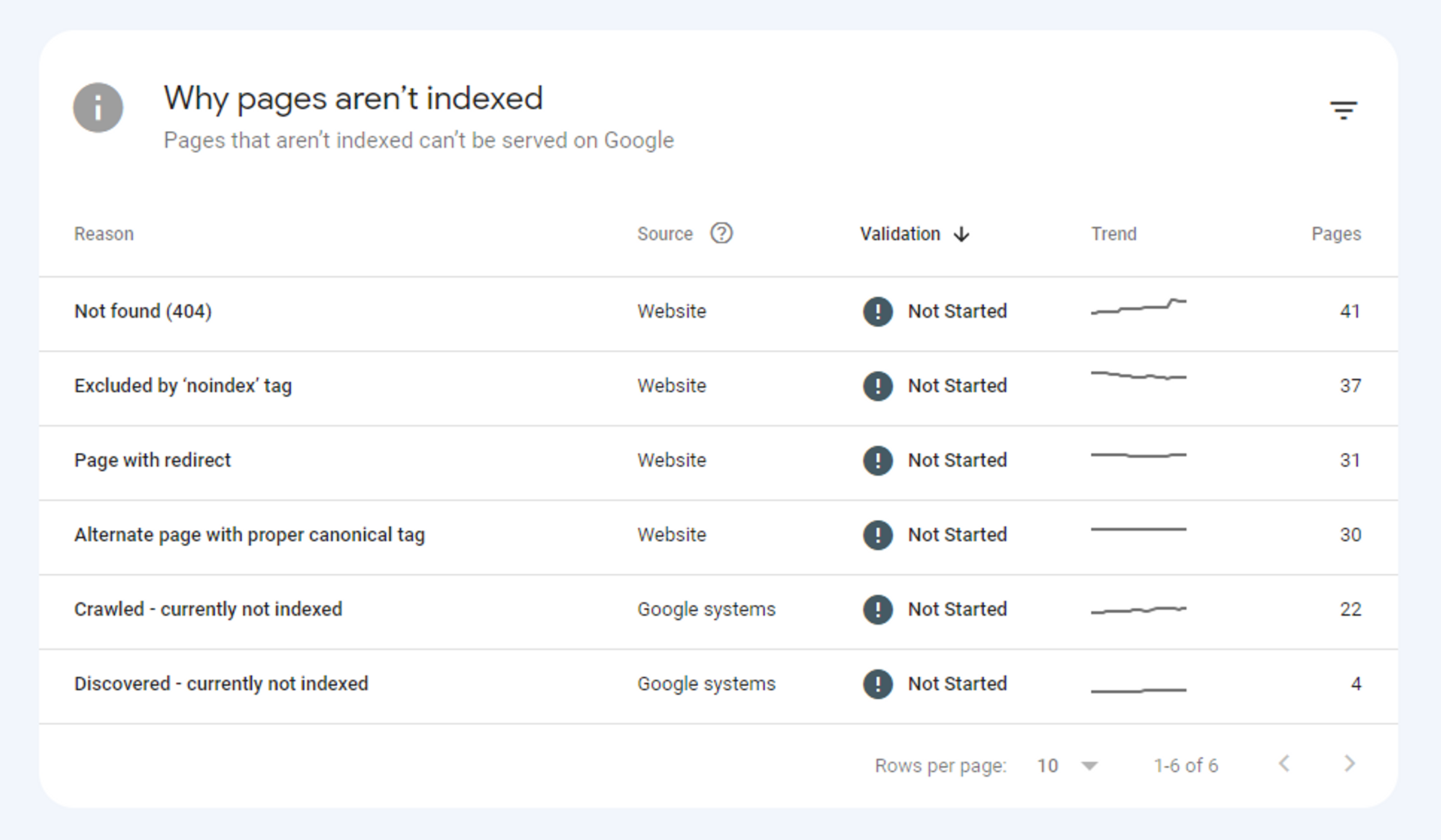

You can also dig into why pages weren't indexed: click into a specific reason to see which pages it affects.

[Figure: Index Failed Reason]

Going further, we can look at the search engine crawler's crawl report for my website.

[Figure: Crawling Report]

Open a specific report to see a visual breakdown of how search engine crawlers are crawling the site.
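GSC's crawl report is built from Google's side. If you have access to your server's access logs, you can get a rough version of the same picture yourself. This sketch assumes the common Apache/Nginx "combined" log format and counts requests whose User-Agent mentions Googlebot, per day and status code; `googlebot_hits` is a hypothetical helper. User-Agent strings can be faked, and Google's documentation recommends verifying real Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter

# Combined log format: ip ident user [time] "request" status size "referer" "user-agent"
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]*\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)


def googlebot_hits(lines) -> Counter:
    """Count requests claiming to be Googlebot, keyed by (day, status)."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if m and "Googlebot" in m.group("ua"):
            counts[(m.group("day"), int(m.group("status")))] += 1
    return counts
```

Usage: `with open("access.log") as f: print(googlebot_hits(f))` (the file name is an assumption). A rising share of 404 or 5xx hits is the same warning sign the crawl report would show.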

[Figure: Crawling Details]

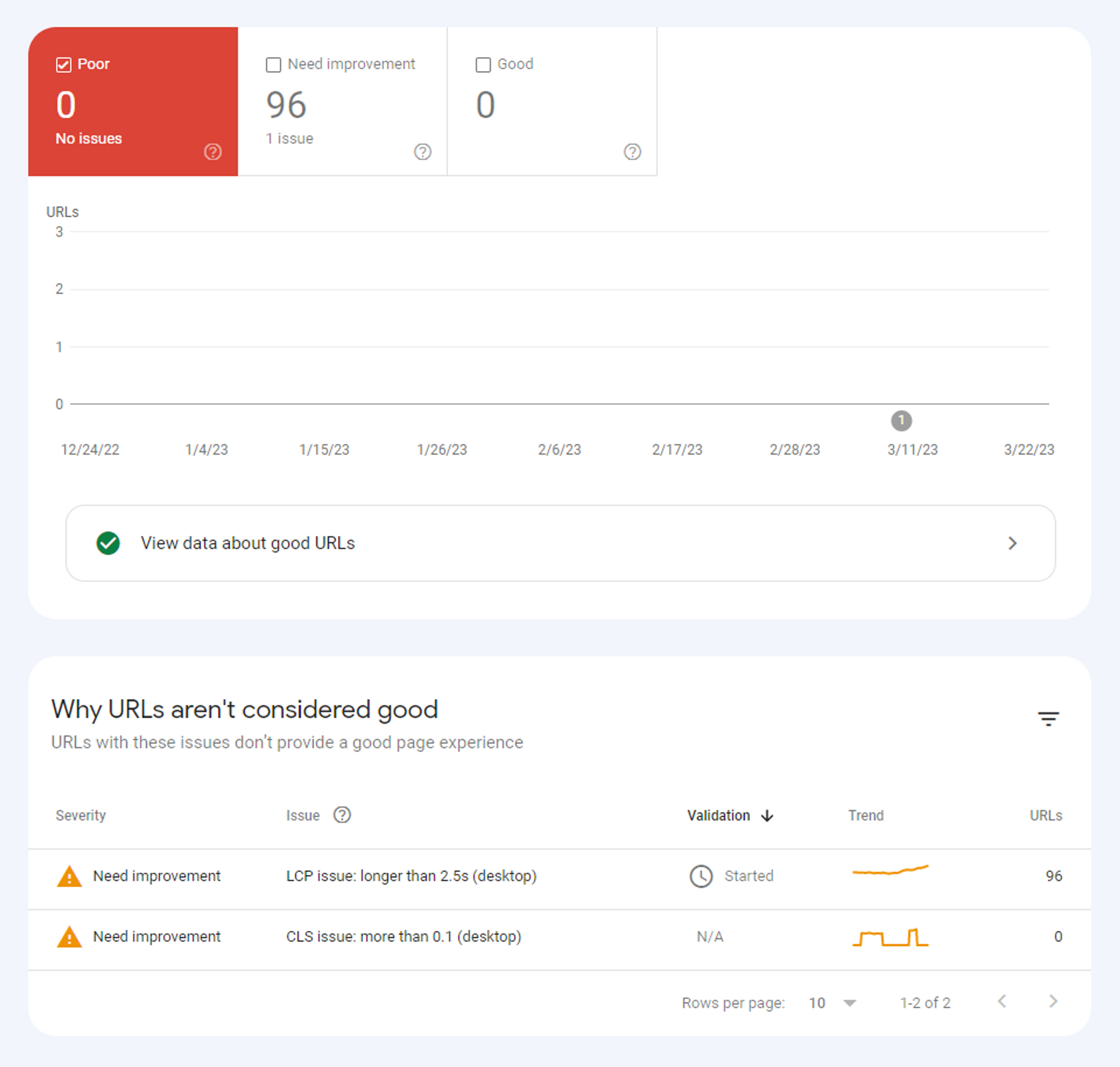

Another area to focus on is Core Web Vitals, a set of metrics Google developed specifically to measure user experience. They tell us how Google views our website's user experience.

[Figure: Core Web Vitals]

Looking at the detailed report, the main problems are LCP and CLS. My blog takes about 3 seconds to load, which misses Google's 2.5-second standard, and I don't plan to invest resources in speeding it up.
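To make the thresholds concrete: Google rates LCP as good at 2.5 s or less and poor above 4 s, and CLS as good at 0.1 or less and poor above 0.25, evaluated at the 75th percentile of page loads. The sketch below encodes those thresholds; the nearest-rank percentile method and the helper names are my own assumptions for illustration, and real tools may compute the percentile slightly differently.

```python
import math

# Core Web Vitals thresholds: (good upper bound, poor lower bound).
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples):
    """75th percentile via the nearest-rank method (an assumption; tools differ)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Classify a metric value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"
```

By these thresholds, a 3-second LCP like mine falls in the "needs improvement" band rather than "poor", which is why it shows up as a problem without being critical.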

LCP and CLS need a longer discussion, so I'll cover them in a later article.

[Figure: CWV Report]

Those are the main elements of website data monitoring; for good technical SEO, these are the metrics to watch.

I hope this helps.
